Sending IR Signals to Control my TV

Quick follow-up to my prior post. Tried generating some IR codes to control my TV, using a Radio Shack High-Output Infrared LED. Decided to tap the PWM subsystem of the PIC: a slightly complicated setup, but pretty straightforward. Set PWM on RB3/P1A (pin 18, again) at 38.5 kHz. Wrote a series of routines for sending packets, bytes, and bits.

Tried sending VOL+/VOL- pairs, but no joy. Tried receiving the IR output with the IR receiver: nada. Found some silly errors in my PWM handling (needed to arm the timer to drive PWM generation). After that, I was able to detect my generated signal :) Forward progress... but still no joy controlling the TV.

The online information I found about NEC encoding says the first two bytes are a device code followed by its complement. That doesn't match my 0x7B/0x1F pair, but for some reason I was suspicious of that anyway. So I modified my code to cycle through all device IDs, hoping that if I hit the right one, I'd see the button's effect on-screen. Still nothing. However, while that code was running, the regular remote stopped working: I was apparently overwhelming the TV's receiver with my own transmission. That meant I was definitely generating the right carrier frequency, and transmitting signals the TV receiver couldn't ignore (maybe I'd reinvented the "Stop That!" remote jammer from years ago).
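On the PWM side, the register values come from a bit of arithmetic. Here's a rough Python desk check (not PIC code) of how the PR2, CCPR1L and CCP1CON<5:4> values used in IRInit fall out. It assumes the usual PIC18 PWM relations: period = (PR2 + 1) × 4 × TOSC × prescale, and duty time = (10-bit duty value) × TOSC × prescale. The `pwm_settings` helper is hypothetical, for illustration only.

```python
def pwm_settings(fosc_hz, carrier_hz, prescale=1, duty=0.5):
    """Compute PR2 and the 10-bit duty value for PIC18-style PWM.

    Assumes period = (PR2 + 1) * 4 * Tosc * prescale and
    duty time = duty_value * Tosc * prescale.
    """
    pr2 = round(fosc_hz / (4 * prescale * carrier_hz)) - 1
    if not 0 <= pr2 <= 255:
        raise ValueError("PR2 out of range; try a different prescale")
    period_counts = 4 * (pr2 + 1)              # PWM period in Tosc units
    duty_value = round(duty * period_counts)   # 10-bit CCPR1L:CCP1CON<5:4> value
    ccpr1l = duty_value >> 2                   # upper 8 bits go in CCPR1L
    dc1b = duty_value & 0b11                   # lower 2 bits go in CCP1CON<5:4>
    actual_hz = fosc_hz / (period_counts * prescale)
    return pr2, duty_value, ccpr1l, dc1b, actual_hz


pr2, duty_value, ccpr1l, dc1b, actual_hz = pwm_settings(8_000_000, 38_500)
print(f"PR2={pr2} duty={duty_value} CCPR1L=0x{ccpr1l:02X} "
      f"DC1B={dc1b:02b} f={actual_hz:.1f} Hz")
```

It reproduces PR2 = 51, CCPR1L = 0x1A and CCP1CON<5:4> = 00, and shows the actual carrier lands at about 38.46 kHz rather than exactly 38.5 kHz, since PR2 has to be an integer.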

Looking at the online doc further, I noticed the 3rd byte is supposed to be the command code, and the 4th its complement. My fourth byte is what's listed in the table above, and it feels right: button 1 is 0x01, 2 is 0x02, etc. But if those are the complemented codes... maybe I messed up my interpretation of the remote's transmissions?

I found a pair of valid codes that were complements of each other: MUTE (0x64) and INFO (0x9B). If I sent either one and my bits were inverted, I'd still be sending a valid code.
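The complement trick is easy to check mechanically. This small Python sketch looks for pairs of codes that are bitwise complements of each other. The code list is only the handful of values that appear in this post (buttons 1 and 2, MUTE, INFO, and the codes used in the listing), not the full table, and the helper names are hypothetical.

```python
def complement(byte):
    """8-bit bitwise complement: what an inverted bit sense would produce."""
    return ~byte & 0xFF


def complement_pairs(codes):
    """Return sorted (a, b) pairs from codes where b is the complement of a."""
    code_set = set(codes)
    return sorted((a, complement(a)) for a in code_set
                  if complement(a) in code_set and a < complement(a))


# 1, 2, Source, Channel down, vol+, vol-, MUTE, INFO, freeze
codes = [0x01, 0x02, 0x40, 0x51, 0x60, 0x61, 0x64, 0x9B, 0x9E]
for a, b in complement_pairs(codes):
    print(f"0x{a:02X} <-> 0x{b:02X}")
```

Besides MUTE/INFO, this also turns up vol- (0x61) and the freeze code (0x9E) as a complementary pair.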

Still no response from the TV. So I switched back to my original device ID (7B1F); still nothing. Finally, I complemented the codes I was sending, and...

SUCCESS!

Mute-Unmute-Mute-Unmute!

[Image: Mute command received, TV muted]

So it looks like my previous analysis was complemented?

I double-checked my earlier code, and sure enough, I'd used a skip-if-less-than where I should have used a skip-if-greater-than: my codes were in fact complemented!

Or rather, my device ID was complemented, and my interpretation of the byte order for bytes 3 and 4 was reversed. So the correct sequence is:

0x84 / 0xE0 / Command Code / ~Command Code

So the above table is still correct. But send the true code as the third byte, and the complemented one as the fourth.
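Putting the fix together: the device bytes are the complements of the original 0x7B/0x1F, and the command goes out true-then-complemented, each byte LSB first (the order the `rrncf` loop in `sendIRByte` shifts bits out). A small Python sketch of the resulting frame, plus what a decoder with its bit sense flipped would report for it; the function names are hypothetical.

```python
ORIGINAL_DEVICE = (0x7B, 0x1F)                      # first (bit-inverted) reading
DEVICE = tuple(~b & 0xFF for b in ORIGINAL_DEVICE)  # complemented: (0x84, 0xE0)


def nec_frame(command):
    """The four bytes in send order: device, device, command, ~command."""
    return [DEVICE[0], DEVICE[1], command & 0xFF, ~command & 0xFF]


def frame_bits(frame):
    """Flatten bytes into bits, least-significant bit of each byte first."""
    return [(byte >> i) & 1 for byte in frame for i in range(8)]


def inverted_reading(frame):
    """What a decoder with its bit sense flipped would report for the same frame."""
    return [~b & 0xFF for b in frame]


mute = nec_frame(0x64)
print("sent:    ", [f"0x{b:02X}" for b in mute])
print("bits:    ", "".join(str(bit) for bit in frame_bits(mute)))
print("inverted:", [f"0x{b:02X}" for b in inverted_reading(mute)])
```

The inverted reading comes out as 0x7B / 0x1F / 0x9B / 0x64: the original device bytes, with the command and its complement apparently swapped, which matches both mistakes described above.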

EPILOGUE

It's a bit glitchy. Interestingly, codes that end in a 1 work better than those that end in a 0. There's a big clue somewhere in there: almost certainly my timing is a bit off, and I'm crossing bit boundaries. Too tired to think deeply on this, but the basic setup seems to work. I'm using a granularity of 100 µs; I might do better with a smaller interval. Even better would be to use timer functions to reduce the variability due to code-path timing differences.

DOUBLE EPILOGUE

Just on a hunch, I tweaked my basic bit timing for a "1" bit, from an off time of 2.4 ms to 2.2 ms. Voilà, much happiness! Even codes now work lol. Still some oddness, especially when sending different codes in rapid succession: more evidence of timing skew.

Okay, really strange observation: I put a (clear) glass in front of the LED to stop it from transmitting, but instead it seemed to make the system work even better! Something optical or multi-path going on? I can alternate channel and volume commands and it seems to do what it's supposed to. Much weirdness!

OKAY, FINAL TWEAK: scaled back my 0.6 ms intervals to 0.5 ms. Yay! Seems to work darn near perfectly now. Good enough for me, good proof of concept :)
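With the final tweaks, the listing sends START as 86 ticks on and 43 off; each bit as 5 on, then 5 off for a 0 or 5 + 16 = 21 off for a 1; and STOP as 5 on, then 125 + 125 + 107 + 127 off. Here's a rough Python model of one frame in those 100 µs ticks. It's an idealization: it ignores instruction overhead, the wait for a PWM period boundary in IROn, and the fact that each delay100 tick is really a little under 100 µs, so measured times will differ somewhat. The helper name is hypothetical. One side effect of sending the command and its complement shows up here: together those two bytes always hold exactly eight 1 bits, so every frame has the same length whatever the command.

```python
TICK_US = 100  # nominal length of one delay100 step


def frame_ticks(command, device=(0x84, 0xE0)):
    """Idealized ON+OFF length of one IRSend frame, in delay100 ticks."""
    frame = [device[0], device[1], command & 0xFF, ~command & 0xFF]
    total = 86 + 43                               # START: ON, then OFF
    for byte in frame:
        for i in range(8):                        # LSB first, like sendIRByte
            bit = (byte >> i) & 1
            total += 5 + 5 + (16 if bit else 0)   # ON, base OFF, extra OFF for a 1
    total += 5 + (125 + 125 + 107) + 127          # STOP: ON, 35.7 ms OFF, extra gap
    return total


for cmd in (0x51, 0x60, 0x61, 0x40, 0x64):
    print(f"0x{cmd:02X}: {frame_ticks(cmd) * TICK_US / 1000:.1f} ms")
```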

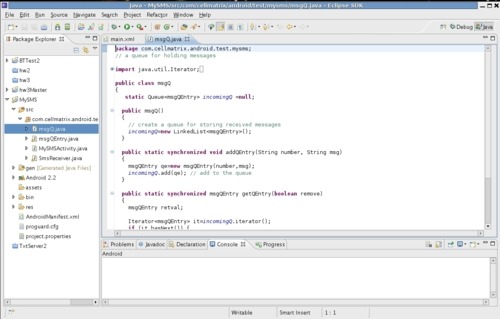

IR TRANSMISSION CODE

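;
; Try sending IR codes to TV
;
; N. Macias July 2012
;
#include "P18F1320.INC"
    list    p=18F1320
    config  wdt=off, lvp=off
    radix   dec

    EXTERN  delay           ; external 0.1 sec delay (used by delay5Sec)

    udata
bitCount    res 1           ; bits left to send in the current byte
ourByte     res 1           ; command code passed to IRSend
workingByte res 1           ; byte being shifted out by sendIRByte
iterCount   res 1           ; for delay100 code

    code
    org     0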

Start:
    call    IRInit

mainLoop:
    ;movlw  0x9e            ; freeze code
    ;call   IRSend
    ;call   delay5Sec
    ;goto   mainLoop

    movlw   0x51            ; Channel down
    call    IRSend
    movlw   0x60            ; vol+
    call    IRSend
    movlw   0x60            ; vol+
    call    IRSend
    movlw   0x61            ; vol-
    call    IRSend
    movlw   0x40            ; Source
    call    IRSend
    movlw   0x40            ; Source
    call    IRSend
    goto    mainLoop

; IR initialization code
IRInit:
    movlw   0x70
    movwf   OSCCON          ; 8MHz operation (IRCF2:0 = 111)
    ; start with output pin set to input (PWM output disconnected)
    bsf     TRISB,3         ; pin 18 (RB3/P1A) drives the IR transmitter once made an output
    ; we want to use the PWM feature of the PIC (P1A). This requires some setup...
    ; At a frequency of 38.5 kHz, we need PR2=51. and TMR2 prescale=1 (FOSC=8MHz):
    ;   period = (PR2+1) * 4 * TOSC * prescale = 52 * 4 * 125 ns = 26 us (~38.46 kHz)
    ; For 50% duty cycle (13 us = 104 TOSC) we need to load 104. into CCPR1L:CCP1CON<5:4>
    ; 104. is 0001 1010 00 (10-bit binary): so set CCPR1L=0x1a and CCP1CON<5:4>=00
    movlw   51
    movwf   PR2             ; set PWM period (51.)
    ; set ECCP control register
    movlw   0x0c            ; 00 (P1A modulated) 00 (LSBs of duty cycle) 1100 (PWM, active high)
    movwf   CCP1CON
    ; set upper 8 bits of the 10-bit duty cycle (104./4 = 26. = 0x1a)
    movlw   0x1a
    movwf   CCPR1L          ; MSBs of duty cycle (2 LSBs set to 00 above)
    bcf     ECCPAS,7        ; clear any shutdown code
    ; TMR2 setup
    bcf     PIR1,1          ; clear TMR2 int flag (TMR2IF)
    bcf     T2CON,0
    bcf     T2CON,1         ; set prescale value=1
    bsf     T2CON,2         ; Turn on the timer!
    ; To turn on PWM output, we need to:
    ; - wait until PIR1<1> is set, then clear it (see IROn); and
    ; - clear bit 3 of TRISB
    ;
    ; to turn OFF, just set TRISB<3>
    return

; Send full packet containing code (in W) through IR
; NEC coding (times in ms):
; 8.55 ON, 4.275 OFF - START
; 0.55 ON, either 2.4 OFF (1) or 0.6 OFF (0) - repeat for each bit
; 0.55 ON, 35 OFF - STOP
; (after tuning, this code sends 0.5 ON, then 0.5 OFF for a 0 or 2.1 OFF for a 1)
IRSend:
    movwf   ourByte         ; save this
    ; send START pulse
    call    IROn            ; begin START pulse
    movlw   86
    call    delay100        ; ~8.6 ms ON
    call    IROff
    movlw   43
    call    delay100        ; ~4.3 ms OFF
    ; send 4-byte message
    movlw   0x84            ; start of address
    call    sendIRByte
    movlw   0xE0            ; end of address
    call    sendIRByte
    movf    ourByte,w
    call    sendIRByte      ; actual code
    movf    ourByte,w
    comf    WREG            ; toggle bits
    call    sendIRByte      ; and send complemented code
    ; send STOP bit
    call    IROn
    movlw   5
    call    delay100        ; ~0.5 ms ON
    call    IROff
    movlw   125
    call    delay100
    movlw   125
    call    delay100
    movlw   107
    call    delay100        ; total 35.7 mSec
    movlw   127
    call    delay100        ; extra just for good measure
    return

; pump out a single byte, LSB first
sendIRByte:
    movwf   workingByte     ; send this byte as 8 pulse patterns
    movlw   8
    movwf   bitCount        ; count down to 0
sibLoop:
    call    IROn            ; turn on the beam
    movlw   5
    call    delay100        ; ON pulse; approx 0.5 mSec
    call    IROff           ; and turn off
    movlw   5
    call    delay100        ; 0.5 mS off time (this is a 0)
    movlw   16              ; prepare for additional 1.6 mS off-time
    btfsc   workingByte,0   ; skip next if bit=0
    call    delay100        ; else wait additional time (2.1 mS total for a 1)
    ; now move to next bit
    rrncf   workingByte     ; move next bit to LSB
    decfsz  bitCount
    goto    sibLoop         ; repeat 8 times
    return                  ; all bits sent!

; turn on PWM output
IROn:
    btfss   PIR1,1          ; wait for TMR2 match flag (TMR2IF): start of a new PWM period
    goto    IROn
    bcf     PIR1,1          ; clear the flag so the next IROn waits for a fresh period
    bcf     ECCPAS,7        ; clear any shutdown code
    bcf     TRISB,3         ; set RB3 as an output
    bsf     T2CON,2         ; Turn on the timer! (already on from IRInit; harmless)
    return

; and turn off PWM output
IROff:
    bsf     TRISB,3         ; make RB3 an input again, disconnecting the PWM output
    return

; delays ~100 uS * WREG
; Based on 8MHz FOSC (0.5 uS per instruction cycle), that's ~200 cycles per count
delay100:
    movwf   iterCount
delay100Loop1:              ; start of single ~100 uS delay pass
    movlw   65              ; total time is (199*W) + 5 cycles, call to return
delay100Loop2:
    decfsz  WREG
    goto    delay100Loop2   ; inner loop plus movlw: 64*3 + 3 + 1 = 196 cycles
    decfsz  iterCount
    goto    delay100Loop1   ; +3 cycles: 199 cycles (99.5 uS) per pass
    return

delay5Sec:
    movlw   50              ; wait 5 seconds
delay5SecLoop:
    call    delay           ; wait 0.1 sec
    decfsz  WREG            ; repeat 50 times (relies on delay preserving W)
    goto    delay5SecLoop
    return

    end
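And a cycle-count check of delay100's "(199*w) + 5" comment, as a small Python model. It assumes standard PIC18 timings: one instruction cycle is 4 oscillator clocks (0.5 µs at 8 MHz); decfsz takes 1 cycle when it doesn't skip and 3 when it skips a two-word instruction such as goto; goto takes 2; call and return take 2 each. The function is a hypothetical desk-check helper, not a simulator.

```python
FOSC_HZ = 8_000_000
CYCLE_US = 4 * 1_000_000 / FOSC_HZ   # one instruction cycle = 4 oscillator clocks


def delay100_cycles(w, inner=65):
    """Instruction cycles from 'call delay100' through its 'return', for W = 1..255."""
    # Inner loop: (inner - 1) passes of decfsz+goto (3 cycles each), then a
    # final decfsz that skips the 2-word goto (3 cycles), plus the movlw (1).
    inner_pass = (inner - 1) * 3 + 3 + 1
    # Outer loop: decfsz iterCount + goto, or a 3-cycle skip on the last pass.
    outer_pass = inner_pass + 3
    return 2 + 1 + w * outer_pass + 2   # call + movwf + passes + return


for w in (5, 43, 86):
    c = delay100_cycles(w)
    print(f"W={w:3d}: {c} cycles = {c * CYCLE_US:.1f} us")
```

Each pass works out to 199 cycles, or 99.5 µs, so W = 5 gives exactly 500 µs and W = 86 about 8.56 ms: close enough to the nominal 100 µs steps for this job.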